Astrobee ISS Free-Flyer Datasets for Space Intra-Vehicular Robot Navigation Research

RA-L 2024 (IROS 2024)

Abstract

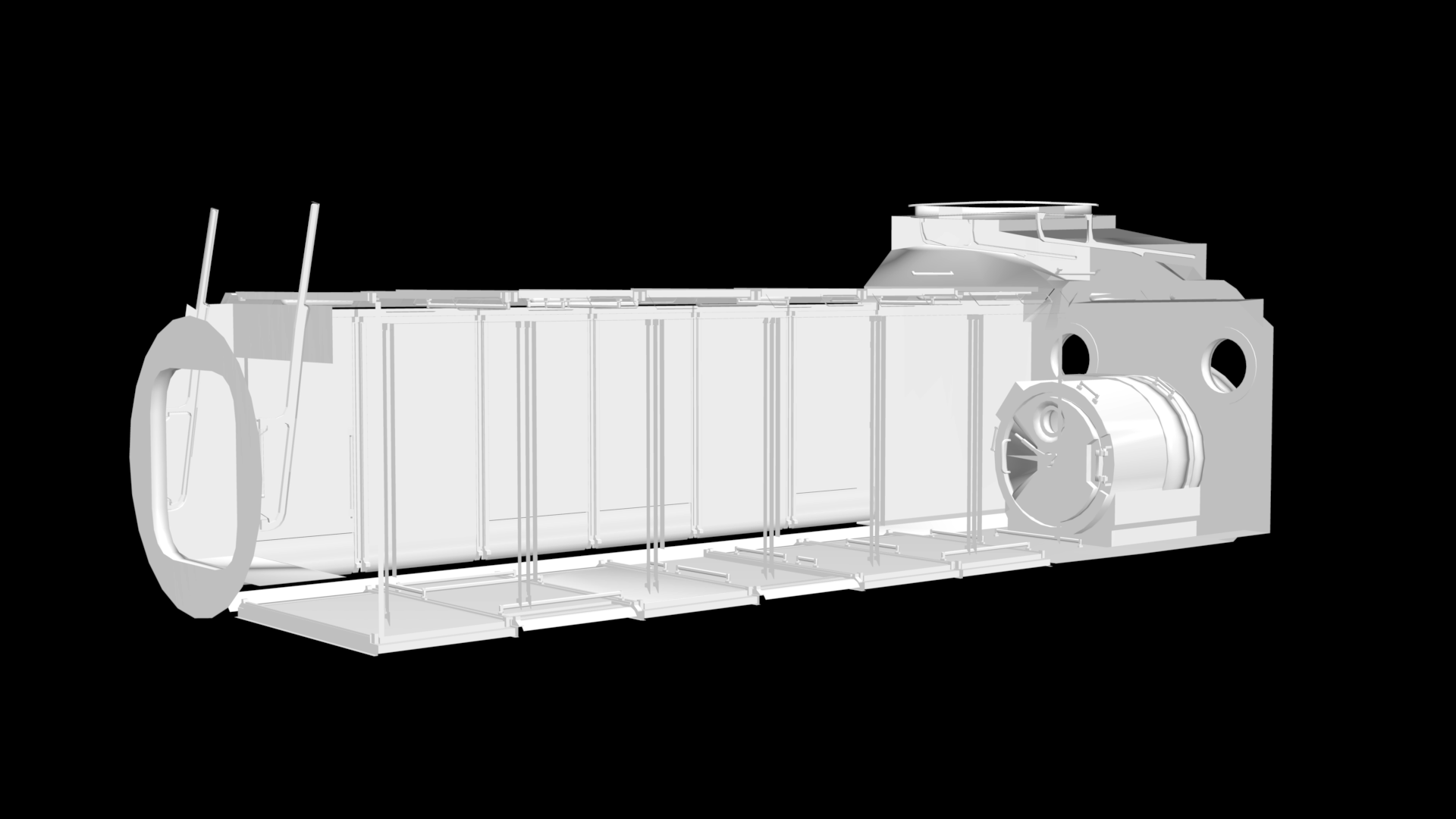

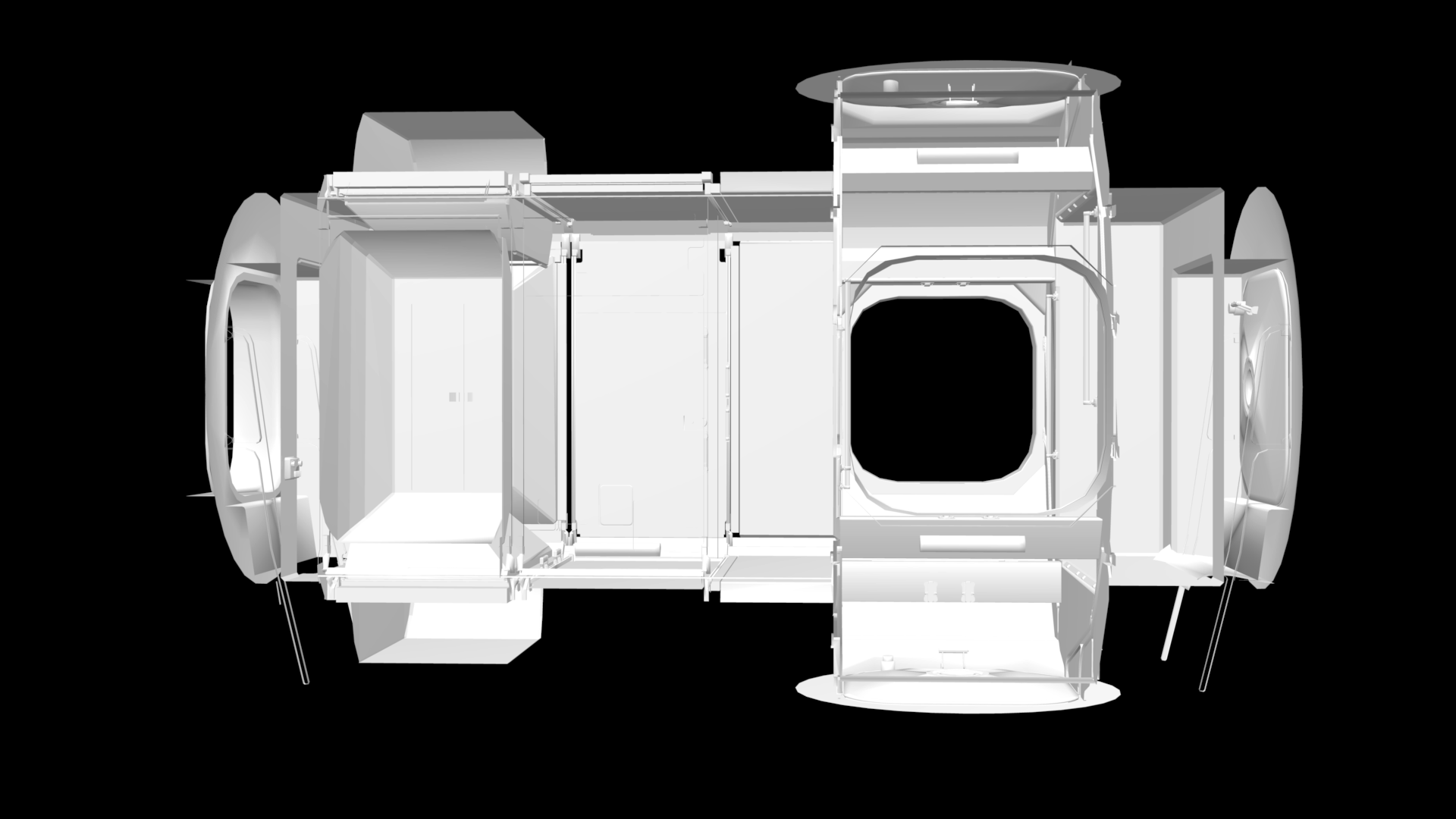

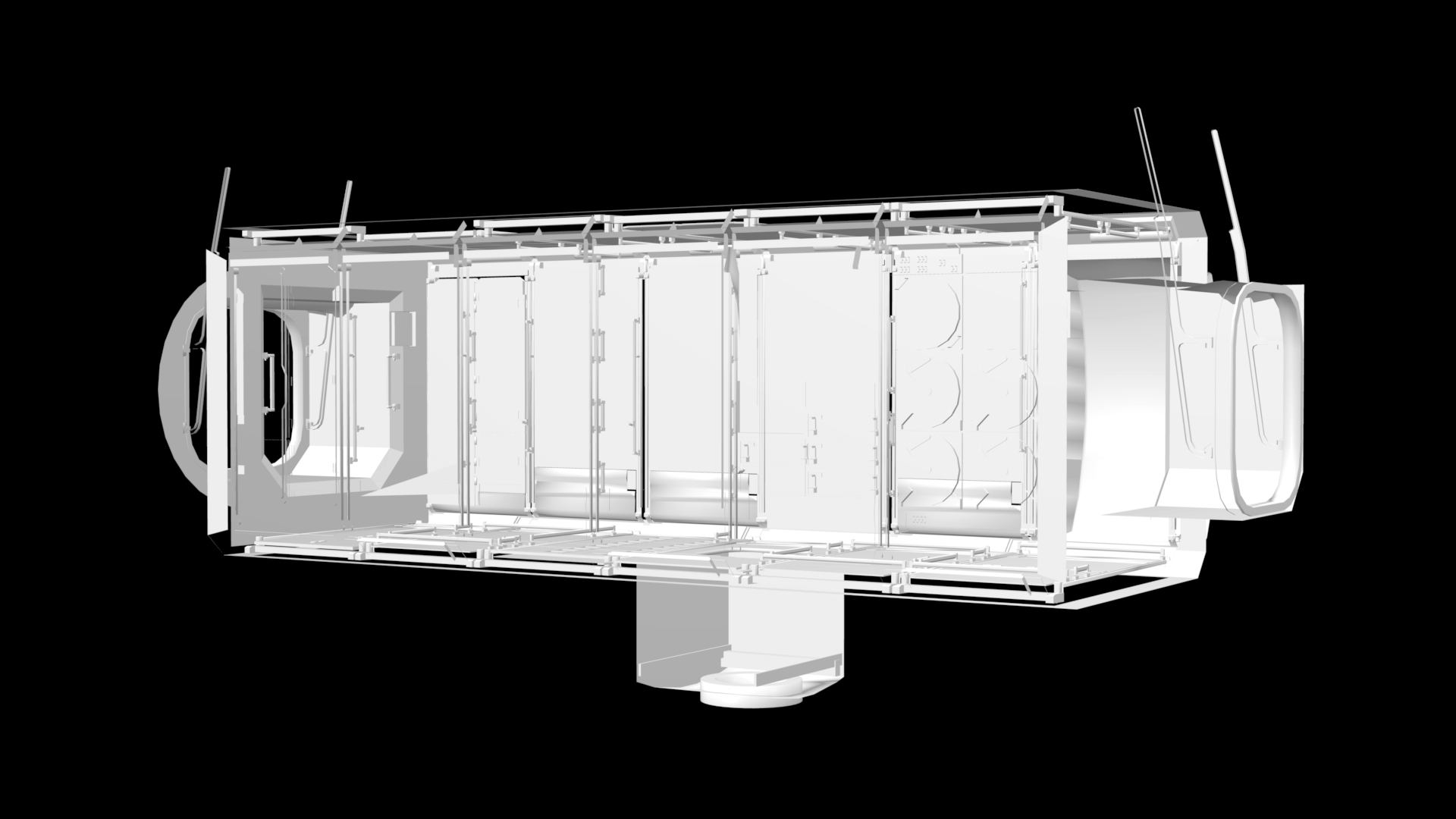

We present the first annotated benchmark datasets for evaluating free-flyer visual-inertial localization and mapping algorithms in a zero-g spacecraft interior. The datasets were collected by the Astrobee free-flying robots that operate inside the International Space Station (ISS). Space intra-vehicular free-flyers face unique localization challenges: their IMU does not provide a gravity vector, their attitude is fully arbitrary, and they operate in a dynamic, cluttered environment. We extensively evaluate state-of-the-art visual navigation algorithms on these challenging Astrobee datasets, showing superior performance of classical geometry-based methods over recent data-driven approaches. The datasets include monocular images and IMU measurements, with multiple sequences performing a variety of maneuvers and covering four ISS modules. The sensor data is spatio-temporally aligned, and extrinsic/intrinsic calibrations, ground-truth 6-DoF camera poses, and detailed 3D CAD models are included to support evaluation.

Download

Our datasets cover various intra-vehicular activities of the Astrobee free-flying robots operating on the ISS, recorded between May 13, 2019 and July 14, 2022. The datasets were collected inside the largest single ISS module, the Japanese Experiment Module (JEM), nicknamed Kibo, and contain ground-truth 6-DoF camera poses.

| Sequence | Duration (s) | Download | Original bag file |
|---|---|---|---|
| td_roll | 63 | link | link |
| td_pitch | 75 | link | link |
| td_yaw | 50 | link | link |
| td_dock | 98 | link | link |

| Sequence | Duration (s) | Download | Original bag file |
|---|---|---|---|
| cal_checkerboard | 460 | link | link |
| cal_ARtag | 495 | link | link |

| Sequence | Duration (s) | Download | Original bag file |
|---|---|---|---|
| iva_kibo_trans | 229 | link | link |
| iva_kibo_rot | 196 | link | link |
| iva_hatch_inspection1 | 403 | link | link |
| iva_hatch_inspection2 | 521 | link | link |
| iva_watch_queenbee | 236 | link | link |
| iva_robot_occulusion | 192 | link | link |
| iva_ARtag | 62 | link | link |
| iva_badlocal_rotation | 313 | link | link |
| iva_badlocal_descend | 244 | link | link |

| Sequence | Duration (s) | Download | Original bag file |
|---|---|---|---|
| ff_return_journey_forward | 402 | link | link |
| ff_return_journey_up | 413 | link | link |
| ff_return_journey_down | 398 | link | link |
| ff_return_journey_left | 303 | link | link |
| ff_return_journey_right | 328 | link | link |
| ff_return_journey_rot | 108 | link | link |
| ff_JEM2USL_dark | 32 | link | link |
| ff_USL2JEM_bright | 92 | link | link |
| ff_nod2_dark | 296 | link | link |
| ff_nod2_bright | 162 | link | link |

We also offer detailed 3D CAD models for evaluation. You can download the 3D CAD models for the JEM, USLab, and Node 2 modules. For CAD models of other modules, please refer to the original source link.

Dataset Format

We provide each Astrobee sequence in the TUM RGB-D format, with the following files:

- description.yaml: a text file containing an overall description of the sequence (the name of the robot used, the original rosbag file, and the date of recording, etc.)

- gray/: a folder containing all undistorted gray images (PNG format, 1 channel, 8-bit per channel)

- gray_raw/: a folder containing all raw (distorted) gray images with FOV lens distortion

- gray.txt: a text file with a consecutive list of all gray images (format: timestamp filename)

- undistorted_calib.txt: a text file containing camera intrinsic parameters for undistorted gray images (format: fx fy cx cy)

- distorted_calib.txt: a text file containing camera intrinsic parameters for distorted gray images with FOV lens distortion coefficient (format: fx fy cx cy w)

- imu.txt: a text file containing the timestamped gyro and accelerometer measurements expressed in IMU body frame (format: timestamp wx wy wz ax ay az)

- groundtruth.txt: a text file containing the ground-truth 6-DoF camera poses for all gray images stored as the timestamped translation vector and unit quaternion expressed in ISS world coordinate frame (format: timestamp tx ty tz qx qy qz qw)
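The file formats listed above can be read with a few lines of code. The following is a minimal Python sketch, not the official parser: it assumes whitespace-separated columns in the order given above and, as in other TUM-style datasets, skips lines starting with `#`. The function names (`read_rows`, `parse_image_list`, `parse_numeric`, `quat_to_rot`, `pose_to_matrix`) and the sample strings are hypothetical and only for illustration.

```python
from typing import List, Tuple


def read_rows(text: str) -> List[List[str]]:
    """Split text into whitespace-separated rows, skipping blanks and '#' comments.

    Skipping '#' lines is an assumption borrowed from the TUM convention.
    """
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            rows.append(line.split())
    return rows


def parse_image_list(text: str) -> List[Tuple[float, str]]:
    """gray.txt rows: timestamp filename."""
    return [(float(r[0]), r[1]) for r in read_rows(text)]


def parse_numeric(text: str, width: int) -> List[Tuple[float, ...]]:
    """Numeric rows: width=7 for imu.txt, width=8 for groundtruth.txt."""
    return [tuple(float(x) for x in r[:width]) for r in read_rows(text)]


def quat_to_rot(qx: float, qy: float, qz: float, qw: float) -> List[List[float]]:
    """3x3 rotation matrix from a quaternion (normalized first for safety)."""
    n = (qx * qx + qy * qy + qz * qz + qw * qw) ** 0.5
    x, y, z, w = qx / n, qy / n, qz / n, qw / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


def pose_to_matrix(row: Tuple[float, ...]) -> List[List[float]]:
    """4x4 homogeneous matrix from a groundtruth.txt row
    (timestamp tx ty tz qx qy qz qw)."""
    _, tx, ty, tz, qx, qy, qz, qw = row
    R = quat_to_rot(qx, qy, qz, qw)
    return [R[0] + [tx], R[1] + [ty], R[2] + [tz], [0.0, 0.0, 0.0, 1.0]]


if __name__ == "__main__":
    # Synthetic sample rows, not taken from the datasets.
    gt_text = "# timestamp tx ty tz qx qy qz qw\n10.5 1 2 3 0 0 0 1\n"
    imu_text = "10.50 0.01 0.00 -0.01 0.0 0.0 0.0\n"
    poses = parse_numeric(gt_text, 8)
    imu = parse_numeric(imu_text, 7)
    for matrix_row in pose_to_matrix(poses[0]):
        print(matrix_row)
    print(len(imu), "IMU sample(s)")
```

In practice the strings would come from `open(path).read()` on the files in a sequence folder. The sketch builds the matrix directly from the stored translation and quaternion. Whether that matrix maps camera coordinates to the ISS world frame or the reverse should be checked against the dataset documentation before use.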

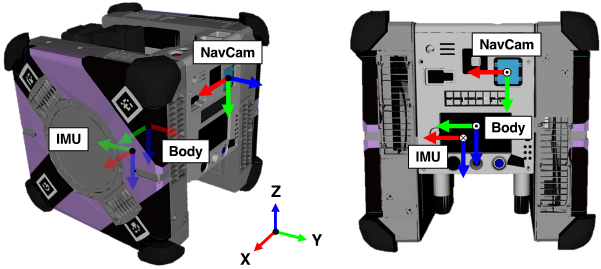

Astrobee Platform

The Astrobee free-flying IVA robots used for dataset collection. Shown are the positions of the sensors and the rigid-body transformations that link them.

- NavCam provides monocular image sequences at 5 Hz.

- The IMU is logged at 100 Hz and expressed in the IMU body frame.
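Because the IMU runs roughly 20 times faster than NavCam, visual-inertial pipelines typically collect the IMU samples that fall between two consecutive images. The sketch below shows one way to do that grouping in Python with a binary search. It assumes both timestamp lists are sorted and share one clock; the function name `group_imu_by_frame` is hypothetical, and the timestamps in the demo are synthetic.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple


def group_imu_by_frame(
    image_times: Sequence[float], imu_times: Sequence[float]
) -> List[Tuple[int, int]]:
    """For each consecutive image pair (t_k, t_k+1), return the half-open
    index range [start, end) of IMU samples with t_k <= t < t_k+1.

    Both inputs must be sorted in ascending order.
    """
    ranges = []
    for t0, t1 in zip(image_times, image_times[1:]):
        start = bisect_left(imu_times, t0)  # first IMU sample at or after t0
        end = bisect_left(imu_times, t1)    # first IMU sample at or after t1
        ranges.append((start, end))
    return ranges


if __name__ == "__main__":
    # Synthetic timestamps at the nominal rates: 5 Hz images, 100 Hz IMU.
    image_times = [k / 5 for k in range(3)]
    imu_times = [i / 100 for i in range(41)]
    for start, end in group_imu_by_frame(image_times, imu_times):
        print(f"IMU samples [{start}, {end}) -> {end - start} measurements")
```

The returned index ranges can be used to slice the rows of `imu.txt` for each frame interval. Real recordings may drop frames or samples, so the per-interval count should not be assumed constant.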

Notice

- Supplementary materials

- A simple MATLAB script for parsing the datasets is available.

- For four sequences (iva_hatch_inspection1, iva_hatch_inspection2, iva_watch_queenbee, iva_robot_occulusion), occlusions and limited viewpoints make generating ground truth challenging. Instead, we provide an AstroLoc trajectory as a baseline.

- The current maintainers are Jungil Ham (jungilham@gm.gist.ac.kr) and Suyoung Kang (suyoungkang1222@gmail.com).

Citation

If you use this work or find it helpful, please cite us (BibTeX):

@article{kang2024astrobee,

title = {Astrobee ISS Free-Flyer Datasets for Space Intra-Vehicular Robot Navigation Research},

author = {Kang, Suyoung and Soussan, Ryan and Lee, Daekyeong and Coltin, Brian and Vargas, Andres Mora and Moreira, Marina and Hamilton, Kathryn and Garcia, Ruben and Bualat, Maria and Smith, Trey and others},

journal = {IEEE Robotics and Automation Letters},

year = {2024},

publisher={IEEE}

}